Running a Local LLM on a Low-Spec PC

Everywhere you look these days, people are talking about AI.

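You might think this has nothing to do with those of us on a tight budget. It turns out, though, that you can run a local LLM even on a low-spec PC, so I gave it a try.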

Please read this as half a joke rather than as practical advice. It isn't really a technical article. It's more of a work log along the lines of "I got a local LLM running on a low-spec PC."

Installation Environment

First, let's check the CPU in the low-spec PC I'm using this time.

Running an LLM on a CPU like this? Have I lost my mind?

$ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 36 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 4

On-line CPU(s) list: 0-3

Vendor ID: GenuineIntel

Model name: Intel(R) Core(TM) i5 CPU M 460 @ 2.53GHz

CPU family: 6

Model: 37

Thread(s) per core: 2

Core(s) per socket: 2

Socket(s): 1

Stepping: 5

Frequency boost: enabled

CPU(s) scaling MHz: 54%

CPU max MHz: 2534.0000

CPU min MHz: 1199.0000

BogoMIPS: 5053.79

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ht tm pbe syscall nx rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni dtes64 monitor ds_cpl vmx est tm2 ssse3 cx16 xtpr pdcm pcid sse4_1 sse4_2 popcnt lahf_lm pti ssbd ibrs ibpb stibp tpr_shadow flexpriority ept vpid dtherm ida arat vnmi flush_l1d

Virtualization features:

Virtualization: VT-x

Caches (sum of all):

L1d: 64 KiB (2 instances)

L1i: 64 KiB (2 instances)

L2: 512 KiB (2 instances)

L3: 3 MiB (1 instance)

NUMA:

NUMA node(s): 1

NUMA node0 CPU(s): 0-3

Vulnerabilities:

Gather data sampling: Not affected

Indirect target selection: Not affected

Itlb multihit: KVM: Mitigation: VMX disabled

L1tf: Mitigation; PTE Inversion; VMX conditional cache flushes, SMT vulnerable

Mds: Vulnerable: Clear CPU buffers attempted, no microcode; SMT vulnerable

Meltdown: Mitigation; PTI

Mmio stale data: Unknown: No mitigations

Reg file data sampling: Not affected

Retbleed: Not affected

Spec rstack overflow: Not affected

Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Spectre v2: Mitigation; Retpolines; IBPB conditional; IBRS_FW; STIBP conditional; RSB filling; PBRSB-eIBRS Not affected; BHI Not affected

Srbds: Not affected

Tsa: Not affected

Tsx async abort: Not affected

Vmscape: Not affected

The model name identifies the CPU as an Intel Core i5-460M, which is a first-generation Core processor.

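For CPU-only inference, a CPU that supports the AVX512 instruction set seems to be recommended. The Flags line above shows that this i5-460M tops out at SSE4.2 and has no AVX support at all.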

With Intel, the newest Core processor isn't automatically the right choice. Some Core models don't support AVX512, so be careful.

AVX2 is available on 4th-generation Intel Core and later, and on essentially the entire AMD Ryzen lineup.
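On Linux, you can check which of these instruction sets a machine supports by reading the `flags` line in `/proc/cpuinfo`. This is the same list that `lscpu` printed above. The sketch below assumes Linux, and the helper names `parse_flags` and `simd_support` are hypothetical:

```python
from __future__ import annotations

from pathlib import Path

# SIMD extensions relevant to CPU-only inference, roughly oldest to newest
SIMD_FLAGS = ["sse4_2", "avx", "avx2", "avx512f"]


def parse_flags(cpuinfo_text: str) -> set[str]:
    """Return the set of CPU flags from the first 'flags' line of /proc/cpuinfo."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # The line looks like "flags\t\t: fpu vme de ..."
            return set(line.split(":", 1)[1].split())
    return set()


def simd_support(flags: set[str]) -> dict[str, bool]:
    """Map each SIMD flag of interest to whether the CPU reports it."""
    return {name: name in flags for name in SIMD_FLAGS}


if __name__ == "__main__":
    text = Path("/proc/cpuinfo").read_text()
    for name, ok in simd_support(parse_flags(text)).items():
        print(f"{name:8} {'yes' if ok else 'no'}")
```

Going by the flags listed above, the i5-460M should report `yes` only for `sse4_2`.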

Next, memory.

$ free -h

               total        used        free      shared  buff/cache   available

Mem:           7.6Gi       1.6Gi       2.3Gi        66Mi       4.0Gi       6.0Gi

Swap:          2.0Gi          0B       2.0Gi

That's about 8 GB of memory. More is better, but this is what I have to work with this time.
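Whether a model fits comes down roughly to its file size compared with available memory. On top of the weights, the runtime also needs room for the KV cache and compute buffers. The rough check below reads Linux's `/proc/meminfo`. The 1.5x headroom factor is an arbitrary assumption chosen for illustration, not a llama.cpp rule, and the helper names are hypothetical:

```python
from __future__ import annotations

import os
from pathlib import Path


def mem_available_bytes(meminfo_text: str) -> int | None:
    """Parse MemAvailable (reported in kB) from /proc/meminfo text."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemAvailable:"):
            # The line looks like "MemAvailable:    6291456 kB"
            return int(line.split()[1]) * 1024
    return None


def fits_in_memory(model_bytes: int, available_bytes: int, headroom: float = 1.5) -> bool:
    """Rough check: model size times an assumed headroom factor must fit in available RAM."""
    return model_bytes * headroom <= available_bytes


if __name__ == "__main__":
    model = "gemma-2-2b-jpn-it-Q4_K_M.gguf"  # the model file used later in this post
    avail = mem_available_bytes(Path("/proc/meminfo").read_text())
    if avail is not None and os.path.exists(model):
        size = os.path.getsize(model)
        print(f"model {size / 2**30:.2f} GiB, available {avail / 2**30:.2f} GiB")
        print("looks OK" if fits_in_memory(size, avail) else "probably too tight")
```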

Setting Up a Python Virtual Environment

As usual, I'll set up a Python virtual environment.

For reference, here's the OS:

$ cat /etc/os-release

PRETTY_NAME="Ubuntu 24.04.4 LTS"

NAME="Ubuntu"

VERSION_ID="24.04"

VERSION="24.04.4 LTS (Noble Numbat)"

VERSION_CODENAME=noble

ID=ubuntu

ID_LIKE=debian

HOME_URL="https://www.ubuntu.com/"

SUPPORT_URL="https://help.ubuntu.com/"

BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"

PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"

UBUNTU_CODENAME=noble

LOGO=ubuntu-logo

Now let's actually set up the environment.

$ mkdir llama

$ cd llama

$ python3 -m venv .venv

$ source .venv/bin/activate
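To confirm that the interpreter you're running is really the one inside `.venv`, compare `sys.prefix` with `sys.base_prefix`. The two differ inside a virtual environment created by `venv`. Here is a small sketch:

```python
import sys


def in_virtualenv() -> bool:
    """True when running inside a venv (sys.prefix points at the venv directory)."""
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("venv active:", in_virtualenv())
    print("interpreter:", sys.executable)
```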

Installing llama-cpp-python

Now to install the star of the show, llama-cpp-python.

llama-cpp-python has minimal requirements, so it seemed like a perfect fit for this experiment.

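$ pip install llama-cpp-python

You need Python 3.8 or later and a C compiler, either gcc or clang. The compiler is required because this command builds llama.cpp from source. Naturally, both were already installed when I ran it.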

According to the "Installation Configuration" section of the llama-cpp-python documentation, you can also install a prebuilt wheel with basic CPU support instead.

In that case, run:

$ pip install llama-cpp-python --extra-index-url https://abetlen.github.io/llama-cpp-python/whl/cpu
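Whichever install method you use, you can check which version ended up in the venv with `importlib.metadata`. It reads the installed package metadata without importing the package, so it doesn't load the native library. This is a minimal sketch, and the helper name `installed_version` is hypothetical:

```python
from __future__ import annotations

from importlib.metadata import PackageNotFoundError, version


def installed_version(dist_name: str) -> str | None:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    v = installed_version("llama-cpp-python")
    print(f"llama-cpp-python {v}" if v else "llama-cpp-python is not installed")
```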

Getting the Model

Download whichever model you like from the Hugging Face website.

This time I went with the Japanese version of Google's Gemma 2 LLM (the 2B model).

alfredplpl has published a quantized version of Google's gemma-2-2b-jpn-it, so I'm borrowing that one.

You can download gemma-2-2b-jpn-it-Q4_K_M.gguf in a browser from here:

https://huggingface.co/alfredplpl/gemma-2-2b-jpn-it-gguf/tree/main

The file is about 1.59 GiB.

To download it directly from the command line:

$ wget https://huggingface.co/alfredplpl/gemma-2-2b-jpn-it-gguf/resolve/main/gemma-2-2b-jpn-it-Q4_K_M.gguf

The file isn't very large, so the download should finish quickly.
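If you'd rather download from Python, the standard library is enough. The sketch below builds the same `resolve/main/` URL used in the `wget` command above. It writes to a temporary `.part` file first, so an interrupted download doesn't leave a truncated `.gguf` behind. The helper names `hf_resolve_url` and `download` are hypothetical:

```python
import os
import shutil
import urllib.request


def hf_resolve_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Build a direct-download URL in the same form as the wget command above."""
    return f"https://huggingface.co/{repo_id}/resolve/{revision}/{filename}"


def download(url: str, dest: str) -> None:
    """Stream url to dest via a temporary .part file, renaming only on success."""
    tmp = dest + ".part"
    with urllib.request.urlopen(url) as resp, open(tmp, "wb") as out:
        shutil.copyfileobj(resp, out)  # copies in chunks rather than holding the file in memory
    os.replace(tmp, dest)


if __name__ == "__main__":
    name = "gemma-2-2b-jpn-it-Q4_K_M.gguf"
    url = hf_resolve_url("alfredplpl/gemma-2-2b-jpn-it-gguf", name)
    if not os.path.exists(name):
        download(url, name)
    print(name, os.path.getsize(name), "bytes")
```

The `huggingface_hub` package's `hf_hub_download` function is another option if you don't mind an extra dependency.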

Testing It

Let's try it out right away.

$ python3 -m llama_cpp.server --model ./gemma-2-2b-jpn-it-Q4_K_M.gguf --host 0.0.0.0 --port 8000

:

ModuleNotFoundError: No module named 'uvicorn'

In my environment, a few modules turned out to be missing, so I installed whatever each run complained about.
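You don't have to find the missing modules one `ModuleNotFoundError` at a time. `importlib.util.find_spec` returns `None` for a module that can't be found, so you can check them all up front. The sketch below uses the import names from the `pip install` line that follows, and the helper name `missing_modules` is hypothetical:

```python
from __future__ import annotations

import importlib.util

# Import names of the server dependencies that turned up missing in this post
SERVER_MODULES = [
    "uvicorn", "anyio", "starlette", "fastapi",
    "sse_starlette", "starlette_context", "pydantic_settings",
]


def missing_modules(names: list[str]) -> list[str]:
    """Return the names that cannot be imported in the current environment."""
    return [n for n in names if importlib.util.find_spec(n) is None]


if __name__ == "__main__":
    missing = missing_modules(SERVER_MODULES)
    if missing:
        print("pip install " + " ".join(missing))
    else:
        print("all server modules are available")
```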

In the end, this is what it took:

$ pip install uvicorn anyio starlette fastapi sse_starlette starlette_context pydantic_settings無事に起動出来たら

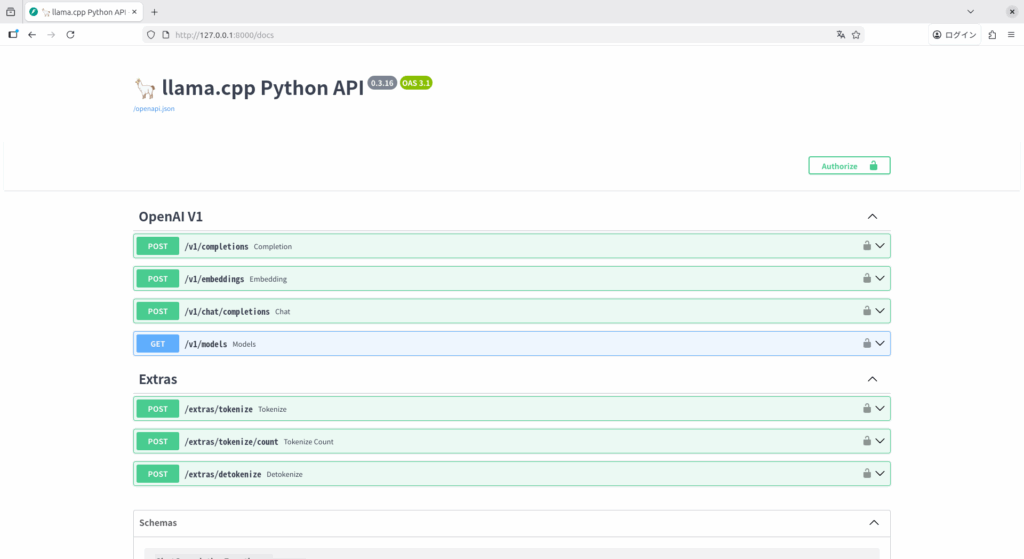

http://127.0.0.1:8000/docs

にアクセスしてみます。

こんな画面が表示されれば動作OKです。
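Besides the `/docs` page, you can call the server over HTTP. llama-cpp-python's server exposes OpenAI-compatible endpoints such as `/v1/completions`. The sketch below posts to that endpoint using only the standard library. It assumes the server started above is listening on port 8000. The request fields shown (`prompt`, `max_tokens`, `stop`) are just a subset of the completion parameters, and the helper name `completion_request` is hypothetical:

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000"  # host/port from the server command above


def completion_request(prompt: str, max_tokens: int = 32) -> urllib.request.Request:
    """Build a POST request for the OpenAI-style /v1/completions endpoint."""
    body = {"prompt": prompt, "max_tokens": max_tokens, "stop": ["\n"]}
    return urllib.request.Request(
        BASE_URL + "/v1/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = completion_request("こんにちは、")  # Japanese prompt meaning "Hello,"
    # Generation is slow on this CPU, so allow a generous timeout
    with urllib.request.urlopen(req, timeout=300) as resp:
        result = json.load(resp)
    print(result["choices"][0]["text"])
```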

Calling It from an Application

Next, let's write some actual Python code and run it.

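# llama_test.py

from llama_cpp import Llama

# Path to the downloaded model

llm = Llama(model_path="./gemma-2-2b-jpn-it-Q4_K_M.gguf")

# Continue the Japanese prompt "Hello," for up to 32 tokens, stopping at a newline; echo=True includes the prompt in the returned text

output = llm("こんにちは、", max_tokens=32, stop=["\n"], echo=True)

print(output)

Let's run it.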

$ python llama_test.py

llama_model_loader: loaded meta data with 40 key-value pairs and 288 tensors from ./gemma-2-2b-jpn-it-Q4_K_M.gguf (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = gemma2

llama_model_loader: - kv 1: general.type str = model

llama_model_loader: - kv 2: general.name str = Gemma 2 2b Jpn It

llama_model_loader: - kv 3: general.finetune str = jpn-it

llama_model_loader: - kv 4: general.basename str = gemma-2

llama_model_loader: - kv 5: general.size_label str = 2B

llama_model_loader: - kv 6: general.license str = gemma

llama_model_loader: - kv 7: general.base_model.count u32 = 1

llama_model_loader: - kv 8: general.base_model.0.name str = Gemma 2 2b It

llama_model_loader: - kv 9: general.base_model.0.organization str = Google

llama_model_loader: - kv 10: general.base_model.0.repo_url str = https://huggingface.co/google/gemma-2...

llama_model_loader: - kv 11: general.tags arr[str,2] = ["conversational", "text-generation"]

llama_model_loader: - kv 12: general.languages arr[str,1] = ["ja"]

llama_model_loader: - kv 13: gemma2.context_length u32 = 8192

llama_model_loader: - kv 14: gemma2.embedding_length u32 = 2304

llama_model_loader: - kv 15: gemma2.block_count u32 = 26

llama_model_loader: - kv 16: gemma2.feed_forward_length u32 = 9216

llama_model_loader: - kv 17: gemma2.attention.head_count u32 = 8

llama_model_loader: - kv 18: gemma2.attention.head_count_kv u32 = 4

llama_model_loader: - kv 19: gemma2.attention.layer_norm_rms_epsilon f32 = 0.000001

llama_model_loader: - kv 20: gemma2.attention.key_length u32 = 256

llama_model_loader: - kv 21: gemma2.attention.value_length u32 = 256

llama_model_loader: - kv 22: general.file_type u32 = 15

llama_model_loader: - kv 23: gemma2.attn_logit_softcapping f32 = 50.000000

llama_model_loader: - kv 24: gemma2.final_logit_softcapping f32 = 30.000000

llama_model_loader: - kv 25: gemma2.attention.sliding_window u32 = 4096

llama_model_loader: - kv 26: tokenizer.ggml.model str = llama

llama_model_loader: - kv 27: tokenizer.ggml.pre str = default

llama_model_loader: - kv 28: tokenizer.ggml.tokens arr[str,256000] = ["<pad>", "<eos>", "<bos>", "<unk>", ...

llama_model_loader: - kv 29: tokenizer.ggml.scores arr[f32,256000] = [-1000.000000, -1000.000000, -1000.00...

llama_model_loader: - kv 30: tokenizer.ggml.token_type arr[i32,256000] = [3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, ...

llama_model_loader: - kv 31: tokenizer.ggml.bos_token_id u32 = 2

llama_model_loader: - kv 32: tokenizer.ggml.eos_token_id u32 = 1

llama_model_loader: - kv 33: tokenizer.ggml.unknown_token_id u32 = 3

llama_model_loader: - kv 34: tokenizer.ggml.padding_token_id u32 = 0

llama_model_loader: - kv 35: tokenizer.ggml.add_bos_token bool = true

llama_model_loader: - kv 36: tokenizer.ggml.add_eos_token bool = false

llama_model_loader: - kv 37: tokenizer.chat_template str = {{ bos_token }}{% if messages[0]['rol...

llama_model_loader: - kv 38: tokenizer.ggml.add_space_prefix bool = false

llama_model_loader: - kv 39: general.quantization_version u32 = 2

llama_model_loader: - type f32: 105 tensors

llama_model_loader: - type q4_K: 156 tensors

llama_model_loader: - type q6_K: 27 tensors

print_info: file format = GGUF V3 (latest)

print_info: file type = Q4_K - Medium

print_info: file size = 1.59 GiB (5.21 BPW)

init_tokenizer: initializing tokenizer for type 1

load: control token: 45 '<unused38>' is not marked as EOG

load: control token: 74 '<unused67>' is not marked as EOG

load: control token: 55 '<unused48>' is not marked as EOG

load: control token: 99 '<unused92>' is not marked as EOG

load: control token: 102 '<unused95>' is not marked as EOG

load: control token: 44 '<unused37>' is not marked as EOG

load: control token: 26 '<unused19>' is not marked as EOG

load: control token: 42 '<unused35>' is not marked as EOG

load: control token: 92 '<unused85>' is not marked as EOG

load: control token: 90 '<unused83>' is not marked as EOG

load: control token: 106 '<start_of_turn>' is not marked as EOG

load: control token: 88 '<unused81>' is not marked as EOG

load: control token: 5 '<2mass>' is not marked as EOG

load: control token: 104 '<unused97>' is not marked as EOG

load: control token: 68 '<unused61>' is not marked as EOG

load: control token: 94 '<unused87>' is not marked as EOG

load: control token: 59 '<unused52>' is not marked as EOG

load: control token: 2 '<bos>' is not marked as EOG

load: control token: 25 '<unused18>' is not marked as EOG

load: control token: 93 '<unused86>' is not marked as EOG

load: control token: 95 '<unused88>' is not marked as EOG

load: control token: 76 '<unused69>' is not marked as EOG

load: control token: 97 '<unused90>' is not marked as EOG

load: control token: 56 '<unused49>' is not marked as EOG

load: control token: 81 '<unused74>' is not marked as EOG

load: control token: 13 '<unused6>' is not marked as EOG

load: control token: 51 '<unused44>' is not marked as EOG

load: control token: 47 '<unused40>' is not marked as EOG

load: control token: 8 '<unused1>' is not marked as EOG

load: control token: 103 '<unused96>' is not marked as EOG

load: control token: 75 '<unused68>' is not marked as EOG

load: control token: 79 '<unused72>' is not marked as EOG

load: control token: 39 '<unused32>' is not marked as EOG

load: control token: 49 '<unused42>' is not marked as EOG

load: control token: 41 '<unused34>' is not marked as EOG

load: control token: 34 '<unused27>' is not marked as EOG

load: control token: 6 '[@BOS@]' is not marked as EOG

load: control token: 40 '<unused33>' is not marked as EOG

load: control token: 33 '<unused26>' is not marked as EOG

load: control token: 86 '<unused79>' is not marked as EOG

load: control token: 43 '<unused36>' is not marked as EOG

load: control token: 35 '<unused28>' is not marked as EOG

load: control token: 32 '<unused25>' is not marked as EOG

load: control token: 28 '<unused21>' is not marked as EOG

load: control token: 19 '<unused12>' is not marked as EOG

load: control token: 67 '<unused60>' is not marked as EOG

load: control token: 9 '<unused2>' is not marked as EOG

load: control token: 52 '<unused45>' is not marked as EOG

load: control token: 16 '<unused9>' is not marked as EOG

load: control token: 98 '<unused91>' is not marked as EOG

load: control token: 80 '<unused73>' is not marked as EOG

load: control token: 71 '<unused64>' is not marked as EOG

load: control token: 36 '<unused29>' is not marked as EOG

load: control token: 0 '<pad>' is not marked as EOG

load: control token: 11 '<unused4>' is not marked as EOG

load: control token: 70 '<unused63>' is not marked as EOG

load: control token: 77 '<unused70>' is not marked as EOG

load: control token: 64 '<unused57>' is not marked as EOG

load: control token: 50 '<unused43>' is not marked as EOG

load: control token: 20 '<unused13>' is not marked as EOG

load: control token: 73 '<unused66>' is not marked as EOG

load: control token: 23 '<unused16>' is not marked as EOG

load: control token: 38 '<unused31>' is not marked as EOG

load: control token: 21 '<unused14>' is not marked as EOG

load: control token: 15 '<unused8>' is not marked as EOG

load: control token: 37 '<unused30>' is not marked as EOG

load: control token: 14 '<unused7>' is not marked as EOG

load: control token: 30 '<unused23>' is not marked as EOG

load: control token: 62 '<unused55>' is not marked as EOG

load: control token: 3 '<unk>' is not marked as EOG

load: control token: 18 '<unused11>' is not marked as EOG

load: control token: 22 '<unused15>' is not marked as EOG

load: control token: 66 '<unused59>' is not marked as EOG

load: control token: 65 '<unused58>' is not marked as EOG

load: control token: 10 '<unused3>' is not marked as EOG

load: control token: 105 '<unused98>' is not marked as EOG

load: control token: 87 '<unused80>' is not marked as EOG

load: control token: 100 '<unused93>' is not marked as EOG

load: control token: 63 '<unused56>' is not marked as EOG

load: control token: 31 '<unused24>' is not marked as EOG

load: control token: 58 '<unused51>' is not marked as EOG

load: control token: 84 '<unused77>' is not marked as EOG

load: control token: 61 '<unused54>' is not marked as EOG

load: control token: 1 '<eos>' is not marked as EOG

load: control token: 60 '<unused53>' is not marked as EOG

load: control token: 91 '<unused84>' is not marked as EOG

load: control token: 83 '<unused76>' is not marked as EOG

load: control token: 85 '<unused78>' is not marked as EOG

load: control token: 27 '<unused20>' is not marked as EOG

load: control token: 96 '<unused89>' is not marked as EOG

load: control token: 72 '<unused65>' is not marked as EOG

load: control token: 53 '<unused46>' is not marked as EOG

load: control token: 82 '<unused75>' is not marked as EOG

load: control token: 7 '<unused0>' is not marked as EOG

load: control token: 4 '<mask>' is not marked as EOG

load: control token: 101 '<unused94>' is not marked as EOG

load: control token: 78 '<unused71>' is not marked as EOG

load: control token: 89 '<unused82>' is not marked as EOG

load: control token: 69 '<unused62>' is not marked as EOG

load: control token: 54 '<unused47>' is not marked as EOG

load: control token: 57 '<unused50>' is not marked as EOG

load: control token: 12 '<unused5>' is not marked as EOG

load: control token: 48 '<unused41>' is not marked as EOG

load: control token: 17 '<unused10>' is not marked as EOG

load: control token: 24 '<unused17>' is not marked as EOG

load: control token: 46 '<unused39>' is not marked as EOG

load: control token: 29 '<unused22>' is not marked as EOG

load: special_eos_id is not in special_eog_ids - the tokenizer config may be incorrect

load: printing all EOG tokens:

load: - 1 ('<eos>')

load: - 107 ('<end_of_turn>')

load: special tokens cache size = 249

load: token to piece cache size = 1.6014 MB

print_info: arch = gemma2

print_info: vocab_only = 0

print_info: n_ctx_train = 8192

print_info: n_embd = 2304

print_info: n_layer = 26

print_info: n_head = 8

print_info: n_head_kv = 4

print_info: n_rot = 256

print_info: n_swa = 4096

print_info: is_swa_any = 1

print_info: n_embd_head_k = 256

print_info: n_embd_head_v = 256

print_info: n_gqa = 2

print_info: n_embd_k_gqa = 1024

print_info: n_embd_v_gqa = 1024

print_info: f_norm_eps = 0.0e+00

print_info: f_norm_rms_eps = 1.0e-06

print_info: f_clamp_kqv = 0.0e+00

print_info: f_max_alibi_bias = 0.0e+00

print_info: f_logit_scale = 0.0e+00

print_info: f_attn_scale = 6.2e-02

print_info: n_ff = 9216

print_info: n_expert = 0

print_info: n_expert_used = 0

print_info: causal attn = 1

print_info: pooling type = 0

print_info: rope type = 2

print_info: rope scaling = linear

print_info: freq_base_train = 10000.0

print_info: freq_scale_train = 1

print_info: n_ctx_orig_yarn = 8192

print_info: rope_finetuned = unknown

print_info: model type = 2B

print_info: model params = 2.61 B

print_info: general.name = Gemma 2 2b Jpn It

print_info: vocab type = SPM

print_info: n_vocab = 256000

print_info: n_merges = 0

print_info: BOS token = 2 '<bos>'

print_info: EOS token = 1 '<eos>'

print_info: EOT token = 107 '<end_of_turn>'

print_info: UNK token = 3 '<unk>'

print_info: PAD token = 0 '<pad>'

print_info: LF token = 227 '<0x0A>'

print_info: EOG token = 1 '<eos>'

print_info: EOG token = 107 '<end_of_turn>'

print_info: max token length = 48

load_tensors: loading model tensors, this can take a while... (mmap = true)

load_tensors: layer 0 assigned to device CPU, is_swa = 1

load_tensors: layer 1 assigned to device CPU, is_swa = 0

load_tensors: layer 2 assigned to device CPU, is_swa = 1

load_tensors: layer 3 assigned to device CPU, is_swa = 0

load_tensors: layer 4 assigned to device CPU, is_swa = 1

load_tensors: layer 5 assigned to device CPU, is_swa = 0

load_tensors: layer 6 assigned to device CPU, is_swa = 1

load_tensors: layer 7 assigned to device CPU, is_swa = 0

load_tensors: layer 8 assigned to device CPU, is_swa = 1

load_tensors: layer 9 assigned to device CPU, is_swa = 0

load_tensors: layer 10 assigned to device CPU, is_swa = 1

load_tensors: layer 11 assigned to device CPU, is_swa = 0

load_tensors: layer 12 assigned to device CPU, is_swa = 1

load_tensors: layer 13 assigned to device CPU, is_swa = 0

load_tensors: layer 14 assigned to device CPU, is_swa = 1

load_tensors: layer 15 assigned to device CPU, is_swa = 0

load_tensors: layer 16 assigned to device CPU, is_swa = 1

load_tensors: layer 17 assigned to device CPU, is_swa = 0

load_tensors: layer 18 assigned to device CPU, is_swa = 1

load_tensors: layer 19 assigned to device CPU, is_swa = 0

load_tensors: layer 20 assigned to device CPU, is_swa = 1

load_tensors: layer 21 assigned to device CPU, is_swa = 0

load_tensors: layer 22 assigned to device CPU, is_swa = 1

load_tensors: layer 23 assigned to device CPU, is_swa = 0

load_tensors: layer 24 assigned to device CPU, is_swa = 1

load_tensors: layer 25 assigned to device CPU, is_swa = 0

load_tensors: layer 26 assigned to device CPU, is_swa = 0

load_tensors: tensor 'token_embd.weight' (q6_K) (and 288 others) cannot be used with preferred buffer type CPU_REPACK, using CPU instead

load_tensors: CPU_Mapped model buffer size = 1623.67 MiB

.........................................................................

llama_context: constructing llama_context

llama_context: n_seq_max = 1

llama_context: n_ctx = 512

llama_context: n_ctx_per_seq = 512

llama_context: n_batch = 512

llama_context: n_ubatch = 512

llama_context: causal_attn = 1

llama_context: flash_attn = 0

llama_context: kv_unified = false

llama_context: freq_base = 10000.0

llama_context: freq_scale = 1

llama_context: n_ctx_per_seq (512) < n_ctx_train (8192) -- the full capacity of the model will not be utilized

set_abort_callback: call

llama_context: CPU output buffer size = 0.98 MiB

create_memory: n_ctx = 512 (padded)

llama_kv_cache_unified_iswa: using full-size SWA cache (ref: https://github.com/ggml-org/llama.cpp/pull/13194#issuecomment-2868343055)

llama_kv_cache_unified_iswa: creating non-SWA KV cache, size = 512 cells

llama_kv_cache_unified: layer 0: skipped

llama_kv_cache_unified: layer 1: dev = CPU

llama_kv_cache_unified: layer 2: skipped

llama_kv_cache_unified: layer 3: dev = CPU

llama_kv_cache_unified: layer 4: skipped

llama_kv_cache_unified: layer 5: dev = CPU

llama_kv_cache_unified: layer 6: skipped

llama_kv_cache_unified: layer 7: dev = CPU

llama_kv_cache_unified: layer 8: skipped

llama_kv_cache_unified: layer 9: dev = CPU

llama_kv_cache_unified: layer 10: skipped

llama_kv_cache_unified: layer 11: dev = CPU

llama_kv_cache_unified: layer 12: skipped

llama_kv_cache_unified: layer 13: dev = CPU

llama_kv_cache_unified: layer 14: skipped

llama_kv_cache_unified: layer 15: dev = CPU

llama_kv_cache_unified: layer 16: skipped

llama_kv_cache_unified: layer 17: dev = CPU

llama_kv_cache_unified: layer 18: skipped

llama_kv_cache_unified: layer 19: dev = CPU

llama_kv_cache_unified: layer 20: skipped

llama_kv_cache_unified: layer 21: dev = CPU

llama_kv_cache_unified: layer 22: skipped

llama_kv_cache_unified: layer 23: dev = CPU

llama_kv_cache_unified: layer 24: skipped

llama_kv_cache_unified: layer 25: dev = CPU

llama_kv_cache_unified: CPU KV buffer size = 26.00 MiB

llama_kv_cache_unified: size = 26.00 MiB ( 512 cells, 13 layers, 1/1 seqs), K (f16): 13.00 MiB, V (f16): 13.00 MiB

llama_kv_cache_unified_iswa: creating SWA KV cache, size = 512 cells

llama_kv_cache_unified: layer 0: dev = CPU

llama_kv_cache_unified: layer 1: skipped

llama_kv_cache_unified: layer 2: dev = CPU

llama_kv_cache_unified: layer 3: skipped

llama_kv_cache_unified: layer 4: dev = CPU

llama_kv_cache_unified: layer 5: skipped

llama_kv_cache_unified: layer 6: dev = CPU

llama_kv_cache_unified: layer 7: skipped

llama_kv_cache_unified: layer 8: dev = CPU

llama_kv_cache_unified: layer 9: skipped

llama_kv_cache_unified: layer 10: dev = CPU

llama_kv_cache_unified: layer 11: skipped

llama_kv_cache_unified: layer 12: dev = CPU

llama_kv_cache_unified: layer 13: skipped

llama_kv_cache_unified: layer 14: dev = CPU

llama_kv_cache_unified: layer 15: skipped

llama_kv_cache_unified: layer 16: dev = CPU

llama_kv_cache_unified: layer 17: skipped

llama_kv_cache_unified: layer 18: dev = CPU

llama_kv_cache_unified: layer 19: skipped

llama_kv_cache_unified: layer 20: dev = CPU

llama_kv_cache_unified: layer 21: skipped

llama_kv_cache_unified: layer 22: dev = CPU

llama_kv_cache_unified: layer 23: skipped

llama_kv_cache_unified: layer 24: dev = CPU

llama_kv_cache_unified: layer 25: skipped

llama_kv_cache_unified: CPU KV buffer size = 26.00 MiB

llama_kv_cache_unified: size = 26.00 MiB ( 512 cells, 13 layers, 1/1 seqs), K (f16): 13.00 MiB, V (f16): 13.00 MiB

llama_context: enumerating backends

llama_context: backend_ptrs.size() = 1

llama_context: max_nodes = 2304

llama_context: worst-case: n_tokens = 512, n_seqs = 1, n_outputs = 0

graph_reserve: reserving a graph for ubatch with n_tokens = 512, n_seqs = 1, n_outputs = 512

graph_reserve: reserving a graph for ubatch with n_tokens = 1, n_seqs = 1, n_outputs = 1

graph_reserve: reserving a graph for ubatch with n_tokens = 512, n_seqs = 1, n_outputs = 512

llama_context: CPU compute buffer size = 504.50 MiB

llama_context: graph nodes = 1128

llama_context: graph splits = 1

CPU : SSE3 = 1 | SSSE3 = 1 | LLAMAFILE = 1 | OPENMP = 1 | REPACK = 1 |

Model metadata: {'general.quantization_version': '2', 'tokenizer.ggml.add_space_prefix': 'false', 'tokenizer.chat_template': "{{ bos_token }}{% if messages[0]['role'] == 'system' %}{{ raise_exception('System role not supported') }}{% endif %}{% for message in messages %}{% if (message['role'] == 'user') != (loop.index0 % 2 == 0) %}{{ raise_exception('Conversation roles must alternate user/assistant/user/assistant/...') }}{% endif %}{% if (message['role'] == 'assistant') %}{% set role = 'model' %}{% else %}{% set role = message['role'] %}{% endif %}{{ '<start_of_turn>' + role + '\n' + message['content'] | trim + '<end_of_turn>\n' }}{% endfor %}{% if add_generation_prompt %}{{'<start_of_turn>model\n'}}{% endif %}", 'tokenizer.ggml.padding_token_id': '0', 'general.base_model.0.repo_url': 'https://huggingface.co/google/gemma-2-2b-it', 'general.license': 'gemma', 'gemma2.attn_logit_softcapping': '50.000000', 'tokenizer.ggml.add_bos_token': 'true', 'general.size_label': '2B', 'general.type': 'model', 'gemma2.embedding_length': '2304', 'gemma2.block_count': '26', 'tokenizer.ggml.pre': 'default', 'general.base_model.count': '1', 'general.base_model.0.organization': 'Google', 'general.basename': 'gemma-2', 'gemma2.context_length': '8192', 'general.architecture': 'gemma2', 'gemma2.feed_forward_length': '9216', 'gemma2.attention.head_count': '8', 'tokenizer.ggml.add_eos_token': 'false', 'gemma2.attention.head_count_kv': '4', 'general.base_model.0.name': 'Gemma 2 2b It', 'gemma2.attention.key_length': '256', 'gemma2.attention.value_length': '256', 'gemma2.attention.layer_norm_rms_epsilon': '0.000001', 'general.finetune': 'jpn-it', 'general.file_type': '15', 'gemma2.attention.sliding_window': '4096', 'gemma2.final_logit_softcapping': '30.000000', 'tokenizer.ggml.model': 'llama', 'general.name': 'Gemma 2 2b Jpn It', 'tokenizer.ggml.bos_token_id': '2', 'tokenizer.ggml.eos_token_id': '1', 'tokenizer.ggml.unknown_token_id': '3'}

Available chat formats from metadata: chat_template.default

Using gguf chat template: {{ bos_token }}{% if messages[0]['role'] == 'system' %}{{ raise_exception('System role not supported') }}{% endif %}{% for message in messages %}{% if (message['role'] == 'user') != (loop.index0 % 2 == 0) %}{{ raise_exception('Conversation roles must alternate user/assistant/user/assistant/...') }}{% endif %}{% if (message['role'] == 'assistant') %}{% set role = 'model' %}{% else %}{% set role = message['role'] %}{% endif %}{{ '<start_of_turn>' + role + '

' + message['content'] | trim + '<end_of_turn>

' }}{% endfor %}{% if add_generation_prompt %}{{'<start_of_turn>model

'}}{% endif %}

Using chat eos_token: <eos>

Using chat bos_token: <bos>

llama_perf_context_print: load time = 1394.68 ms

llama_perf_context_print: prompt eval time = 1394.48 ms / 3 tokens ( 464.83 ms per token, 2.15 tokens per second)

llama_perf_context_print: eval time = 1267.61 ms / 2 runs ( 633.81 ms per token, 1.58 tokens per second)

llama_perf_context_print: total time = 2672.58 ms / 5 tokens

llama_perf_context_print: graphs reused = 1

{'id': 'cmpl-b95b0d16-5f87-458a-8715-d90b48e6d4ac', 'object': 'text_completion', 'created': 1771488001, 'model': './gemma-2-2b-jpn-it-Q4_K_M.gguf', 'choices': [{'text': 'こんにちは、どうも!', 'index': 0, 'logprobs': None, 'finish_reason': 'stop'}], 'usage': {'prompt_tokens': 3, 'completion_tokens': 3, 'total_tokens': 6}}

It returned something! The model continued the prompt as こんにちは、どうも! (roughly "Hello, hey there!").
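The call above is plain text completion, so the model simply continues `こんにちは、`. For an instruction-tuned model like this one, `create_chat_completion` applies the chat template stored in the GGUF metadata, which is what the `Using gguf chat template` line in the log refers to. That template raises `System role not supported`, so any instructions have to go into the user turn. The sketch below does exactly that. It also passes `verbose=False` to silence the long load log. The helper `build_messages` is hypothetical:

```python
from __future__ import annotations

MODEL_PATH = "./gemma-2-2b-jpn-it-Q4_K_M.gguf"


def build_messages(user_text: str, instructions: str | None = None) -> list[dict[str, str]]:
    """Build a chat message list for Gemma 2, whose template rejects the system role.

    Any instructions are prepended to the single user turn instead.
    """
    content = f"{instructions}\n\n{user_text}" if instructions else user_text
    return [{"role": "user", "content": content}]


if __name__ == "__main__":
    from llama_cpp import Llama  # imported here so build_messages works without llama_cpp

    llm = Llama(model_path=MODEL_PATH, verbose=False)  # verbose=False hides the load log
    # Instructions: "Answer briefly in Japanese."  Question: "Please introduce yourself."
    messages = build_messages("自己紹介をしてください。", instructions="日本語で短く答えてください。")
    reply = llm.create_chat_completion(messages=messages, max_tokens=64)
    print(reply["choices"][0]["message"]["content"])
```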

Let me tweak the code a little to measure how long it takes.

import time

from llama_cpp import Llama

# Start timing (this includes loading the model, not just generation)

start_time = time.perf_counter()

# Path to the downloaded model

llm = Llama(model_path="./gemma-2-2b-jpn-it-Q4_K_M.gguf")

output = llm("こんにちは、", max_tokens=32, stop=["\n"], echo=True)

# Stop timing

end_time = time.perf_counter()

# Print only the generated text

print(output['choices'][0]['text'])

print(f"Elapsed time: {end_time - start_time:.2f} s")

Here's the result:

こんにちは、どうも!

Elapsed time: 3.70 s

Wow, that takes a fair while.
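The measurement above includes loading the model, not just generating text. To see the two separately, time the `Llama(...)` constructor and the call individually. You can then divide `completion_tokens` from the `usage` field by the generation time. The sketch below does this. The helper name `tokens_per_second` is hypothetical, and `time.perf_counter` is used because it is the clock intended for measuring intervals:

```python
import time

MODEL_PATH = "./gemma-2-2b-jpn-it-Q4_K_M.gguf"


def tokens_per_second(usage: dict, seconds: float) -> float:
    """Completion tokens per second of wall-clock generation time."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return usage["completion_tokens"] / seconds


if __name__ == "__main__":
    from llama_cpp import Llama

    t0 = time.perf_counter()
    llm = Llama(model_path=MODEL_PATH, verbose=False)
    t1 = time.perf_counter()
    output = llm("こんにちは、", max_tokens=32, stop=["\n"], echo=True)
    t2 = time.perf_counter()

    print(f"load: {t1 - t0:.2f} s, generate: {t2 - t1:.2f} s")
    # t2 - t1 also covers prompt evaluation, so this rate is a little pessimistic
    print(f"{tokens_per_second(output['usage'], t2 - t1):.2f} tokens/s")
```

For comparison, the llama.cpp log above reported about 1.58 tokens per second for the generation phase on the i5-460M.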

How does it run on your machine?

For reference, a machine with a 3rd-generation Intel Core i7-3720QM and 16 GB of memory finished the same script in 1.54 seconds.